The BugWright2 project

Autonomous Robotic Inspection and Maintenance on Ship Hulls

Integration week in Porto

[Integration Week]

👷 For this in-person Integration Week, experts in robotics and visualization, as well as international end users, met in the beautiful city of Porto (Portugal) in June 2023 to work together on the implementation of autonomous technologies in ship inspection. We were hosted by UPORTO and APDL at the Matosinhos shipyard (north of Porto).

The objective of this week was to bring together all the mobile agents inspecting a structure of interest: autonomous drones, magnetic crawlers, an underwater system, and inspectors wearing augmented-reality equipment. In the previous integration weeks, we had demonstrated that all agents could be localised individually, but we had not unified the localisation frame. This week in Porto was the first time we brought all the components together in a unified localisation frame, on the same network infrastructure. It was also the first time all the agents could be brought together on the side of a real ship, even if a rather small one, the Cachao Da Valeira 🇵🇹.

UIB worked on several activities during the Integration Week, continuing the development and integration of the processes that implement the first stage of a BW2 inspection mission. This first stage builds the localization framework for the other robots and provides a first set of inspection data: flight log, images, point clouds and mesh, together with the positioning information for the collected data and the corresponding processing results. All of this data is available to the other robots.

During this first stage, the inspection-oriented drone developed by UIB operates as a standalone platform and flies in front of the structure to be inspected, collecting the inspection data in a fully autonomous, systematic way. A number of processes then run on the drone ground station in order to (1) build the localization framework [methodology developed by the Univ. of Klagenfurt team], (2) build a mesh (to be used by the other robots along the BW2 inspection pipeline) and (3) detect defects in the collected images. As part of these development and integration efforts, a number of tests were performed in different scenarios to check the suitability of the processes that generate the localization framework [developed by the Univ. of Klagenfurt team].

- The localization framework is based on visual markers (ArUco tags) and an ultra-wideband (UWB) network.

- The other robots involved in a BW2 inspection mission use the resulting calibration to plan their motion and complete the inspection at a more detailed level.

- Continuing the development and integration efforts, a number of tests were performed to check the suitability of the processes that build the mesh of the surface under inspection.

- The mesh, apart from being an output of an inspection mission, is intended to serve the other robots of the inspection pipeline as input for their motion planning modules.

- Part of the development and integration of the elements involved in the first stage of the BW2 inspection pipeline took place in Porto. It will be continued and completed in the UIB laboratory over the coming months, and tested during the next integration meetings.
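To illustrate how a UWB network can contribute to localization, the sketch below estimates a 3D position from range measurements to anchors at known positions, using a linearized least-squares multilateration. It is a generic textbook technique shown under stated assumptions, not the estimator developed by the Univ. of Klagenfurt team. The function names, anchor coordinates and noise-free ranges are hypothetical.

```python
import math


def solve_linear(matrix, vector):
    """Solve matrix * x = vector by Gaussian elimination with partial pivoting."""
    n = len(vector)
    aug = [list(matrix[i]) + [vector[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("anchor geometry is degenerate")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def multilaterate(anchors, ranges):
    """Estimate (x, y, z) from ranges to at least four non-coplanar anchors.

    Subtracting the first range equation from the others removes the
    quadratic term and leaves a linear system:
        2 (a_i - a_0) . x = |a_i|^2 - |a_0|^2 - d_i^2 + d_0^2
    which is solved in the least-squares sense via the normal equations.
    """
    a0, d0 = anchors[0], ranges[0]
    norm0 = sum(c * c for c in a0)
    rows, rhs = [], []
    for a, d in zip(anchors[1:], ranges[1:]):
        rows.append([2.0 * (a[k] - a0[k]) for k in range(3)])
        rhs.append(sum(c * c for c in a) - norm0 - d * d + d0 * d0)
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(3)]
    return solve_linear(ata, atb)


# Hypothetical anchor layout (metres) and a simulated robot position.
anchors = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (0.0, 3.0, 0.0),
           (0.0, 0.0, 2.0), (4.0, 3.0, 2.0)]
true_position = (1.0, 2.0, 0.5)
ranges = [math.dist(a, true_position) for a in anchors]
estimate = multilaterate(anchors, ranges)
```

With real, noisy ranges the linearized solution is usually only a starting point for a nonlinear refinement or a filter that also fuses the visual marker observations.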

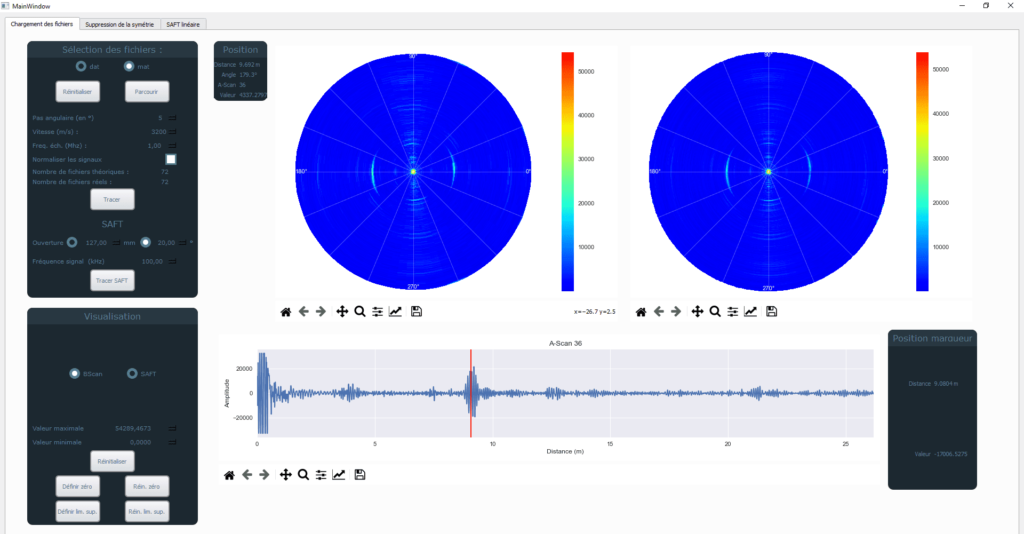

Following the measurement results on the model at CETIM, we conducted tests to confirm the relevance of the method for detecting obstacles on the ship structure. The ship provided to us has a typical structure of welded sheets with interior reinforcement by stiffeners. Using the method developed at CETIM, which is based on the propagation of the SH0 ultrasonic plate wave, we were able to confirm that it can locate the stiffeners on the structure and, in some cases, the welds. The system involves placing the ultrasonic sensor on the structure and rotating it through 360 degrees while collecting signals related to the wave propagation over a distance of approximately 2 m. An image reconstruction algorithm developed at CETIM then produces a map of the area to be inspected.
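The CETIM reconstruction algorithm is not described here, so the following is only a simplified stand-in that shows the geometry of a rotating scan. It assumes a pulse-echo measurement, in which each echo's time of flight gives a range along the current sensor heading, and it places the echo amplitudes into a Cartesian grid centred on the sensor. The wave speed, cell size and all names are assumptions for illustration.

```python
import math

# Assumed SH0 propagation speed in a steel plate (m/s); not taken from the source.
SH0_SPEED_M_S = 3200.0


def echo_position(angle_deg, time_of_flight_s, speed=SH0_SPEED_M_S):
    """Convert one echo (sensor heading, round-trip time) to x, y in metres."""
    distance = speed * time_of_flight_s / 2.0  # pulse-echo: the wave travels out and back
    theta = math.radians(angle_deg)
    return distance * math.cos(theta), distance * math.sin(theta)


def build_map(echoes, cell_m=0.05, max_range_m=2.0):
    """Accumulate echoes into a sparse grid keyed by (column, row).

    `echoes` is an iterable of (angle_deg, time_of_flight_s, amplitude).
    Each cell keeps the strongest amplitude that falls inside it, and echoes
    beyond `max_range_m` are ignored.
    """
    grid = {}
    for angle_deg, tof_s, amplitude in echoes:
        x, y = echo_position(angle_deg, tof_s)
        if math.hypot(x, y) > max_range_m:
            continue
        key = (math.floor(x / cell_m), math.floor(y / cell_m))
        grid[key] = max(grid.get(key, 0.0), amplitude)
    return grid
```

A reflector such as a stiffener would appear as a line of high-amplitude cells when echoes from many headings are combined.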

The inspection was done manually, with the aim of automating it later. Automation would allow more precise angular positioning and a shorter acquisition time. We were able to discuss how our technology operates with various project partners, and we also explored how it could be integrated with one of the partners.

LakeSide Lab’s role in the project is to improve the efficiency of the inspection process using multi-drone systems. The tests in Porto primarily focused on ensuring the dependable flight of GPS-guided drones in proximity to the vessel. These tests were conducted at the pier, within an inflatable cage provided by INSA for safety (see picture on the right).

As a direct outcome of these tests, enhancements were made to power supply electronics, and valuable insights were gained regarding the coordinate system used for the positioning of the drones. Furthermore, LSL collaborated with RWTH to further refine the mission definition protocol.

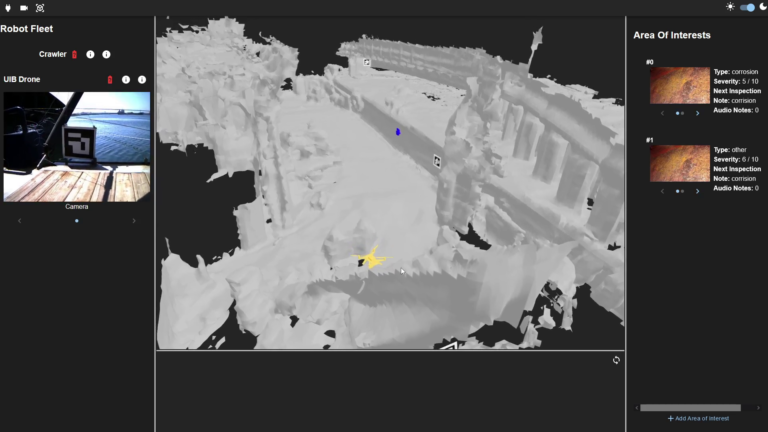

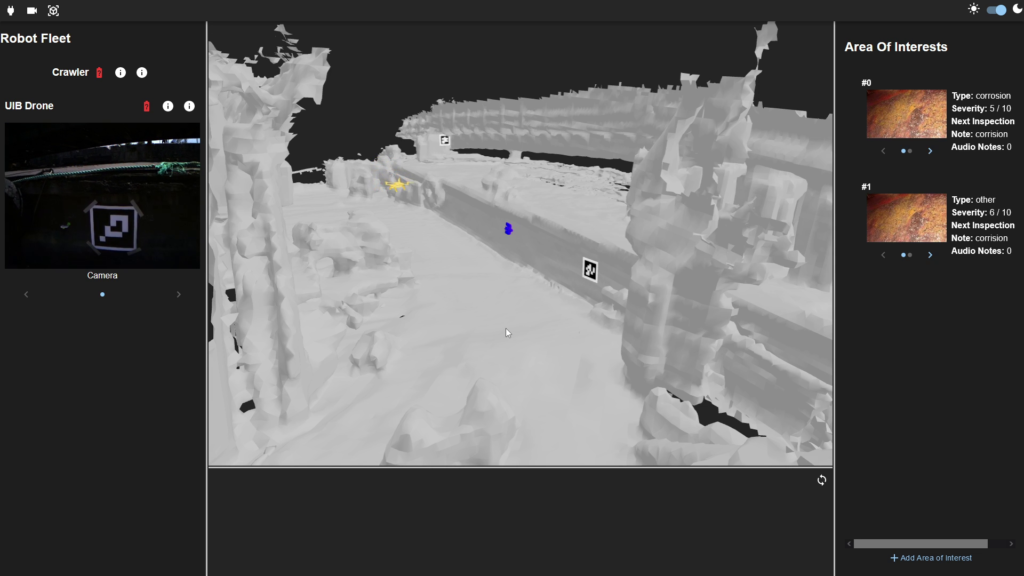

In Porto, the team from UNI-KLU tested a new process to initialize all robotic platforms in a common coordinate system. This allows all the robots to navigate in a synchronized and aligned navigation environment. The first step is a reconnaissance flight using UIB’s UAV to collect measurements of all the UWB modules and visual fiducial markers (ArUco tags). The collected data was then used to create a map of all sensors attached to the ship hull. In a second step, a synchronized inspection by the robots is performed, and the synchronized navigation environment is visualized live using the VR technology developed by RWTH.
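One way to picture the initialization into a common coordinate system: if a robot observes several mapped markers in its own local frame, the rigid transform between the two frames can be estimated from the matched positions. The sketch below does this in 2D with the closed-form least-squares solution. It is a planar simplification for illustration only, since the real system works in 3D and the UNI-KLU method is not detailed here, and all names and values are hypothetical.

```python
import math


def estimate_planar_transform(local_pts, common_pts):
    """Least-squares rotation angle and translation mapping local -> common.

    Both lists hold matched (x, y) positions of the same markers, in the
    same order. Returns (theta_radians, (tx, ty)).
    """
    n = len(local_pts)
    lcx = sum(p[0] for p in local_pts) / n
    lcy = sum(p[1] for p in local_pts) / n
    ccx = sum(q[0] for q in common_pts) / n
    ccy = sum(q[1] for q in common_pts) / n

    sum_cos = 0.0
    sum_sin = 0.0
    for (lx, ly), (cx, cy) in zip(local_pts, common_pts):
        px, py = lx - lcx, ly - lcy  # centred local point
        qx, qy = cx - ccx, cy - ccy  # centred common-frame point
        sum_cos += px * qx + py * qy
        sum_sin += px * qy - py * qx

    theta = math.atan2(sum_sin, sum_cos)
    c, s = math.cos(theta), math.sin(theta)
    # The translation maps the rotated local centroid onto the common centroid.
    tx = ccx - (c * lcx - s * lcy)
    ty = ccy - (s * lcx + c * lcy)
    return theta, (tx, ty)


def to_common(point, theta, translation):
    """Express a point from the robot's local frame in the common frame."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = point
    return c * x - s * y + translation[0], s * x + c * y + translation[1]
```

Once each platform has its own transform, poses reported by the drone, crawler and headset can all be expressed in the same frame, which is what a shared live visualization requires.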

From RWTH, Simon, Torsten and Sebastian were present and worked together with Nathalie, Jan and Thomas from Trier University on further iterations of the user interface. Simon worked on data integration from other partners, while Sebastian worked on the ArUco marker detection of the HoloLens 2. This work was combined on the last two days, when data was collected and visualized live on the ship. During the week, Jan, Nathalie and Thomas conducted interviews about the user interface designs.

Live visualization of the drone from UIB in yellow and the crawler from CNRS in blue. The drone provides a live camera feed, visible on the top left, while some example areas of interest are listed on the right.

CNRS was involved in the process to ensure the robot was accurately positioned on the ship using mesh and UWB anchors. CNRS used the crawler to perform horizontal inspection tasks on the ship.

For NTNU, the goal of the Integration Week was to continue testing the integration of the sonar in the inspection pipeline, in order to provide more robust and accurate models of the inspection points of interest. Additionally, experiments were conducted to synchronise the robotic platform with the common working frame. Valuable data was collected, enabling further refinement of the proposed methods.

In this context, the team from the University of Trier (Germany), as industrial and organizational psychologists, was particularly interested in how competence requirements change with the use of autonomous robotics, and in topics such as technology acceptance and usability.

The Consortium would like to thank UPORTO and APDL for their welcome, support and help during the week.

#h2020 #euproject #integrationweek #fieldtests #research #dronetechnology #innovation #testing #data #robots #augmentedreality